Findings #4

Welcome back to another week. A lot happened this past week, including the much-discussed purchase of Twitter. One promise I found particularly interesting was to open source the timeline algorithm. More on that later.

From the web

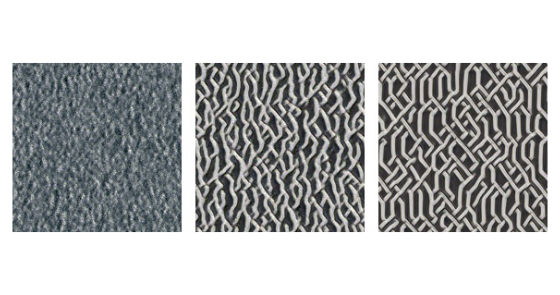

I found myself back on a post from 2021 about self-organising textures. This is an AI setup using small automata to generate complex patterns. Each cell knows only about itself and a small surrounding area, but it can exhibit some fascinating behaviour at scale.
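To make the "local rules, global patterns" idea concrete, here is a minimal sketch in Python. It is not the neural approach from the post. It swaps in a much simpler classic, a one-dimensional elementary cellular automaton (Rule 110 by default), where each cell looks only at itself and its two neighbours. The names `step` and `run`, the grid width, and the wrap-around edges are assumptions made for illustration.

```python
def step(cells, rule=110):
    """Advance a 1D binary cellular automaton by one generation.

    Each cell sees only itself and its two neighbours (edges wrap around).
    `rule` is the standard 0-255 elementary rule number.
    """
    n = len(cells)
    nxt = []
    for i in range(n):
        left, centre, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        # The three-cell neighbourhood forms a number 0..7 that selects
        # one bit of the rule number as the cell's next state.
        idx = (left << 2) | (centre << 1) | right
        nxt.append((rule >> idx) & 1)
    return nxt


def run(width=31, generations=15, rule=110):
    """Start from a single live cell and return every generation as a list."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(generations):
        cells = step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    for row in run():
        print("".join("#" if c else "." for c in row))
```

Running it prints one row per generation. No cell knows anything beyond its immediate neighbourhood, yet the rows together form a structured pattern, which is the same intuition the self-organising textures build on at a much larger scale.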

On a similar thread, we get back to open-sourcing the Twitter timeline algorithm. Having built recommendation-type systems, I know how the sausage is made. Under the hood of any AI like this are many moving parts, each one tuned by a small team through A/B testing to optimise some loss function. Those parts come together, much like the self-organising textures, to create the complex behaviour of what ends up on your screen.

I think any effort to open source it might fall short of general expectations. We can inspect the code, maybe even the weights, but unless we let the system run and test it with data, we won't fully grasp what it means.

For more on that, Travis Fischer wrote up some thoughts on what open-sourcing the algorithm would look like.

From me

Tom is over in the USA this week, so there was no podcast episode. We'll record the next one later this week, so look out for that in the next edition.

I published an essay this week on collaborative AI as a path to general artificial intelligence. If you read the posts above, it's worth reading in the context of small units working together to exhibit complex behaviour.

Final thought

A new section this week. An idea or two that are not yet formed enough for an essay.

Is there a limit to the size of 'ideas', at least insofar as we can comprehend them? Are we drawn to simple answers because they are correct or because complexity is hard to parse?

If you enjoyed this, consider subscribing to get new editions and essays in your inbox each week. I try to keep these short and informative, and you can opt out at any time.